This page was recently revised (18/11/10); see the Revised Physiological Computing FAQ for more information.

1. What is physiological computing?

Physiological Computing (PC) is a term used to describe any computing system that uses real-time physiological data as an input stream to control the user interface. The most basic sort of PC records a signal such as heart rate and displays it to the user on the screen. Other systems, such as Brain-Computer Interfaces (BCI), take a stream of physiological data and convert it into input control at the interface, e.g. to move a cursor or select a command. Other types of PC simply monitor physiology in order to assess psychological states, and these assessments are used to trigger real-time adaptation. For example, if the system detects high blood pressure, it may assume the user is experiencing high frustration and offer help. The applications of PC range from adaptive automation in an aircraft cockpit to computer games where brain activity is used to initiate particular commands.

2. How does physiological computing work?

All PC systems work on the basis of a biocybernetic loop. This loop describes the flow of information from data collection to analysis and translation to the selection of a particular response at the interface. The main purpose of the biocybernetic loop is to translate patterns of physiological activity into meaningful interaction at the computer interface.

This is easy to understand at a conceptual level. For example, this loop could describe how eye movements may be captured and translated into up/down and left/right commands for cursor control. The same flow of information can be used to represent how changes in electrocortical activity (EEG) of the brain can be used to control the movement of an avatar in a virtual world or to activate/deactivate system automation. With respect to an affective computing application, a change in physiological activity, such as increased blood pressure, may indicate higher levels of frustration and the system may respond with help information. In all cases, the same cycle of collection-analysis-translation-response is apparent.
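The collection-analysis-translation-response cycle can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any particular system: the function name `biocybernetic_step`, the use of mean systolic blood pressure as the analysed feature, and the 10% rise over a resting baseline used to label the user as frustrated are all hypothetical choices.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List


@dataclass
class LoopOutput:
    feature: float  # analysed value, here mean systolic blood pressure (mmHg)
    state: str      # psychological interpretation of the feature
    response: str   # action selected at the interface


def biocybernetic_step(
    collect: Callable[[], List[float]],
    baseline: float,
    ratio_threshold: float = 1.10,  # hypothetical: 10% above resting baseline
) -> LoopOutput:
    """Run one collection-analysis-translation-response cycle."""
    samples = collect()                                     # collection: one window of readings
    feature = mean(samples)                                 # analysis: reduce window to one feature
    frustrated = feature / baseline > ratio_threshold
    state = "frustrated" if frustrated else "calm"          # translation
    response = "offer_help" if frustrated else "no_change"  # response
    return LoopOutput(feature, state, response)


# Usage with a stand-in "sensor" that returns fixed readings.
result = biocybernetic_step(lambda: [128.0, 132.0, 130.0], baseline=115.0)
print(result.state, result.response)  # frustrated offer_help
```

In a running system this step would be called repeatedly on successive windows of data, which is what closes the loop.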

However, the low-level detail of the loop involves many variables and signal-processing elements. This is why physiological computing is a multidisciplinary field involving contributions from psychology, neuroscience, engineering and computer science.

3. OK. Give me some examples.

Researchers became interested in physiological computing in the 1990s. A group based at NASA developed a system that measured user engagement (whether or not the person was paying attention) using the electrical activity of the brain. This measure was used to control an autopilot facility during simulated flight-deck operation. If the person was paying attention, they were allowed to use the autopilot; if attention lapsed, the autopilot was switched off, prompting the pilot to take manual control and re-engage with the task. The technology used to develop this system was applied to biofeedback games to treat children with Attention Deficit Hyperactivity Disorder (ADHD). In this case, children with ADHD were motivated to produce a desirable pattern of brain activity (one that alleviated their symptoms) while playing the game.
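As a hedged illustration of how such a system might decide when to allow the autopilot, the sketch below assumes the EEG engagement index commonly associated with this NASA work (Pope and colleagues), beta / (alpha + theta), computed from band powers in each analysis window. The dictionary input format, the function names and the `threshold` value are hypothetical; a real system would calibrate the threshold for each pilot.

```python
from typing import Dict, List

# EEG band power per analysis window (units are arbitrary here).
Bands = Dict[str, float]


def engagement_index(bands: Bands) -> float:
    """Engagement index: beta / (alpha + theta)."""
    return bands["beta"] / (bands["alpha"] + bands["theta"])


def autopilot_modes(windows: List[Bands], threshold: float) -> List[str]:
    """Allow the autopilot while engaged; hand control back when engagement drops."""
    return ["auto" if engagement_index(w) >= threshold else "manual" for w in windows]


windows = [
    {"theta": 4.0, "alpha": 6.0, "beta": 7.0},  # index 0.7: engaged
    {"theta": 6.0, "alpha": 8.0, "beta": 4.2},  # index 0.3: attention lapsed
]
print(autopilot_modes(windows, threshold=0.5))  # ['auto', 'manual']
```

Switching to manual control when engagement falls is a form of negative feedback: the change at the interface is intended to push the pilot's engagement back up.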

Physiological computing was also used by the MIT Media Lab in its investigations into affective computing. These researchers were interested in how psychophysiological data could represent the emotional state of the user and enable the computer to respond to user emotion, for example by offering help if the user was irritated by the system. Brain-Computer Interfaces (BCI) were originally developed to aid communication and mobility for people with disabilities. These systems, which convert a range of physiological data into input control commands, are currently being developed for healthy users, in order to enhance productivity or to deliver new forms of gaming experience.

Physiological computing has been applied to a range of software applications and technologies, such as robotics (making robots aware of the psychological state of their human co-workers), telemedicine (using physiological data to diagnose both health and psychological state), computer-based learning (monitoring the attention and emotions of the student) and computer games (changing game difficulty to maintain engagement).

4. Is the Wii an example of physiological computing?

In a way. The Wii monitors movement and translates that movement into a control input in the same way as a mouse. Physiological computing, as defined here, is quite different. First, physiological computing systems focus on hidden psychological states rather than observed physical movements. Second, the user doesn’t have to move or do anything to provide input to a physiological computing system; instead, the system monitors “hidden” aspects of behaviour.

5. What about Kinect?

This is another system that detects movement, in this case using a camera-based motion-sensing system. According to our definition, physiological computing taps patterns of activity directly from the nervous system. Motion-detection systems work by analysing overt, observable movements. Obviously those movements are derived from nervous system activity, but Kinect doesn’t tap that data source directly. Of course, with both the Wii and Kinect, it may be possible to infer covert psychological states from the way in which people move; for example, vigorous and energetic movement may indicate increased alertness or positive mood. See this recent Wired article for more on this possibility.

6. Isn’t physiological computing just like a biofeedback system?

It could be argued that biofeedback technology is the parent of physiological computing. A large overlap exists between conventional biofeedback and systems that use physiology to inform adaptation at the interface. Conventional biofeedback aids self-regulation by providing a dynamic, real-time representation of physiological activity. Certain forms of affective computing, and systems designed to control automation, function in an identical way, with changes at the interface acting as a cue for improved self-regulation, e.g. if the computer turns the screen red, it means I’m stressed and need to relax. In addition, learning to use a BCI can require a biofeedback regime to train the user in self-regulation before turning them loose on the interface.
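The screen-colour example can be sketched as a simple mapping from one signal to a feedback cue. This assumes skin conductance (in microsiemens) as the stress-related signal, a resting baseline recorded beforehand, and a hypothetical 15% tolerance band; the function name and all values are illustrative and not taken from any biofeedback product.

```python
def feedback_colour(current_us: float, baseline_us: float, tolerance: float = 0.15) -> str:
    """Map skin conductance relative to a resting baseline onto a screen colour cue."""
    if baseline_us <= 0:
        raise ValueError("baseline must be positive")
    change = (current_us - baseline_us) / baseline_us  # proportional change from rest
    if change <= tolerance:
        return "green"  # close to (or below) resting level
    if change <= 2 * tolerance:
        return "amber"  # moderately raised arousal
    return "red"        # well above baseline: cue the user to relax


for reading in (4.2, 5.0, 6.0):
    print(reading, feedback_colour(reading, baseline_us=4.0))
# 4.2 green
# 5.0 amber
# 6.0 red
```

Expressing the reading as a change from the user's own baseline, rather than as an absolute value, is one way to cope with large differences in resting skin conductance between people.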

7. I’m confused. How many different kinds of physiological computing system are there?

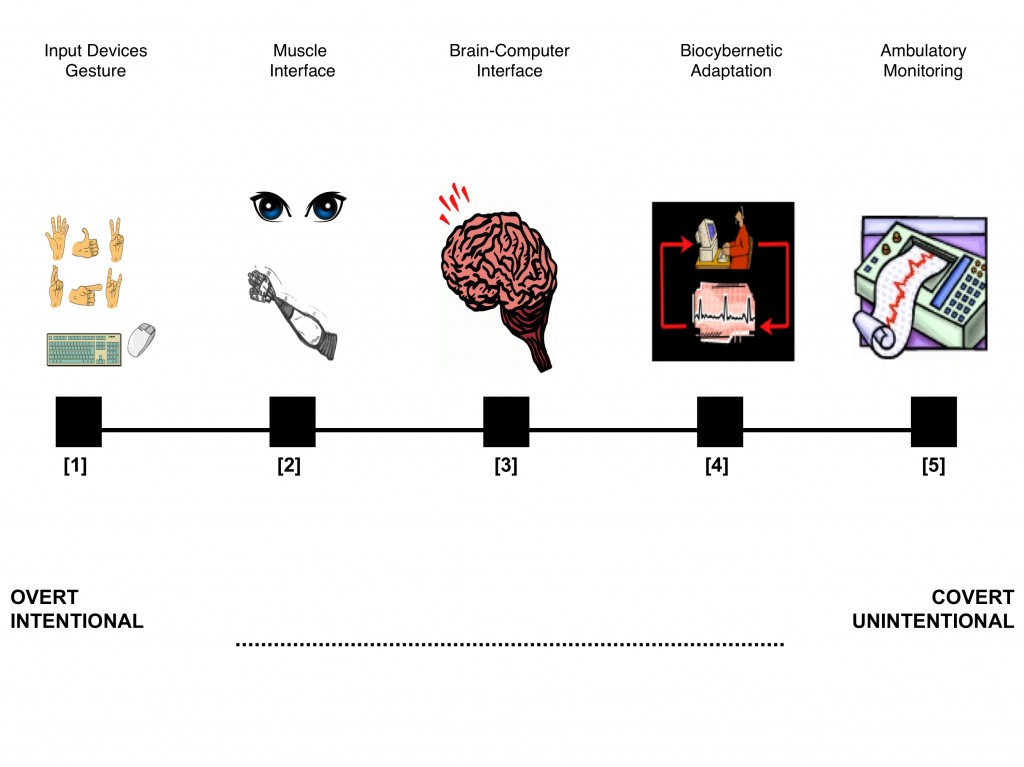

We have suggested a four-group classification to describe the different kinds of physiological computing system. To be accurate, we have suggested a continuum of physiological computing systems, because we want to emphasise the enormous potential for mash-ups and hybrid systems between these four categories. This continuum is represented in the figure above.

Starting on the left, we have conventional input devices [1], including gestures and motion detection, as a point of comparison. Muscle interfaces [2] describe systems that use physiological activity from overt muscular movement, e.g. eye movement, to drive the interaction. The third grouping is BCI [3], where control of the interaction is driven by brain or body activity (several researchers have explored autonomic responses as well as brain activity for this purpose). These three categories of device are all concerned with input control at the interface, e.g. cursor movement or command selection, and generally represent an intention on the part of the user to manipulate some aspect of the interface.

The fourth category, Biocybernetic Adaptation [4], represents a ‘wiretapping’ approach whereby spontaneous changes in psychophysiology are used to inform software adaptation. This category includes all aspects of affective computing where the goal is to make the system more aware of the psychological state of the user. It also includes the monitoring of mental workload for adaptive automation. All these systems require some kind of ambulatory system to capture physiology in applied environments, i.e. unobtrusive and robust wearable sensors.

The final category, ambulatory monitoring [5], describes how the results of this pervasive monitoring can be made available to the user for self-learning and self-diagnosis. For example, physiology could be monitored over days and weeks to study the impact of a diet or exercise regime. This kind of personal informatics could be applied to telemedicine, whereby patient data is viewed by a physician or presented to the user for the purposes of self-diagnosis. Ambulatory monitoring technology underpins all other examples of physiological computing but also represents an application domain in its own right.
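Monitoring the impact of a diet or exercise regime could start with something as simple as a weekly summary of daily readings. The sketch below assumes one resting heart rate value (beats per minute) per day from a wearable sensor and groups the values by ISO calendar week; the function name and the data format are hypothetical.

```python
from collections import defaultdict
from datetime import date
from statistics import mean
from typing import Dict, List, Tuple


def weekly_resting_hr(daily: List[Tuple[date, float]]) -> Dict[Tuple[int, int], float]:
    """Average daily resting heart rate (bpm) per ISO (year, week), in date order."""
    weeks: Dict[Tuple[int, int], List[float]] = defaultdict(list)
    for day, bpm in daily:
        iso_year, iso_week, _ = day.isocalendar()
        weeks[(iso_year, iso_week)].append(bpm)
    return {week: mean(values) for week, values in sorted(weeks.items())}


readings = [
    (date(2010, 11, 1), 70.0), (date(2010, 11, 2), 72.0),  # first week of a regime
    (date(2010, 11, 8), 66.0), (date(2010, 11, 9), 64.0),  # second week
]
print(weekly_resting_hr(readings))  # {(2010, 44): 71.0, (2010, 45): 65.0}
```

A falling weekly average would be one simple indicator worth showing to the user or a physician, although interpreting it still requires the kind of psychophysiological and clinical knowledge discussed elsewhere in this FAQ.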

8. What can a physiological computer allow me to do that is new?

Physiological computing has the potential to change the way in which we communicate with computers. BCI offers new modes of interaction and the novelty of making things happen via “hands-free” mental activity. Biocybernetic adaptation provides the computer with a digital representation of the psychological state of the user. This information can be used to adapt the response of the software in a timely and intuitive fashion: to offer help when needed, to make a game more challenging when the player is bored, or to play relaxing music if the person is stressed. This kind of ‘intelligent’ adaptation paves the way for ‘smart’ technology that learns not only when to adapt itself, but how to personalise the process of adaptation for that particular user. In addition, these systems may be combined into hybrids with multiple functions, e.g. a BCI combined with biocybernetic adaptation.
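Making a game more challenging when the player is bored is often handled as a bounded adjustment rule. The sketch below is one possible version, assuming a hypothetical arousal estimate scaled from 0 (low) to 1 (high), where low values are read as boredom and high values as stress; the thresholds and level limits are arbitrary, and the rule is not taken from any particular game.

```python
def adjust_difficulty(
    level: int,
    arousal: float,            # hypothetical estimate scaled from 0 (low) to 1 (high)
    bored_below: float = 0.3,
    stressed_above: float = 0.8,
    min_level: int = 1,
    max_level: int = 10,
) -> int:
    """Return the next difficulty level given the player's estimated arousal."""
    if arousal < bored_below:
        level += 1  # player appears bored: make the game more challenging
    elif arousal > stressed_above:
        level -= 1  # player appears stressed: ease off
    return max(min_level, min(max_level, level))  # keep within the game's range


print(adjust_difficulty(5, 0.2))   # 6
print(adjust_difficulty(5, 0.9))   # 4
print(adjust_difficulty(10, 0.1))  # 10 (already at maximum)
```

Changing the level one step at a time keeps each adaptation small, so a single noisy reading cannot swing the game from trivial to impossible.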

9. Will these systems be able to read my mind?

Psychophysiological measures can provide an indication of a person’s emotional state. For instance, they can show whether you are alert or tired, or whether you are relaxed or tense. There is some evidence that they can distinguish between positive and negative mood states. The same measures can also capture whether or not a person is mentally engaged with a task. Whether this counts as ‘reading your mind’ depends on your definition. The system would not be able to tell whether you were thinking about making a grilled cheese sandwich or a salad for lunch.

10. Can I buy a physiological computer?

You can buy systems that use psychophysiology for human-computer interaction. For example, a number of headsets developed by Emotiv and NeuroSky are on the market for use as an alternative to a keyboard or mouse. At the moment, commercial systems fall mainly into the BCI application domain. There are also a number of biofeedback games that fall into the category of physiological computing, such as The Wild Divine.

11. What do you need in order to create a physiological computer?

In terms of hardware, you need psychophysiological sensors (such as a GSR sensor, heart rate monitoring apparatus or EEG electrodes) connected to an analogue-to-digital converter. The digital signals can be streamed to a computer via a wired (e.g. serial cable) or wireless (e.g. Bluetooth) connection. On the software side, you need an API or equivalent to access the signals, and you’ll need to develop software that converts incoming physiological signals into a variable that can be used as a control input to an existing software package, such as a game. Of course, none of this is straightforward, because you need to understand something about psychophysiological associations (i.e. how changes in physiology can be interpreted in psychological terms) in order to make your system work.
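Converting incoming signals into a usable control variable can be sketched as normalisation followed by smoothing. This example assumes raw integer counts from the analogue-to-digital converter, calibration limits (`cal_min`, `cal_max`) recorded from the user beforehand, and an exponential moving average with a hypothetical smoothing factor `alpha`; the function name is illustrative, and the resulting value in [0, 1] could then be mapped onto a game parameter.

```python
from typing import Iterable, List, Optional


def counts_to_control(
    counts: Iterable[int], cal_min: int, cal_max: int, alpha: float = 0.2
) -> List[float]:
    """Map raw ADC counts to a smoothed control variable in [0, 1]."""
    if cal_max <= cal_min:
        raise ValueError("cal_max must exceed cal_min")
    out: List[float] = []
    smoothed: Optional[float] = None
    for c in counts:
        x = (c - cal_min) / (cal_max - cal_min)  # normalise to the calibration range
        x = min(1.0, max(0.0, x))                # clamp readings outside calibration
        # Exponential moving average: damps sample-to-sample noise.
        smoothed = x if smoothed is None else alpha * x + (1 - alpha) * smoothed
        out.append(smoothed)
    return out


print(counts_to_control([200, 600, 600], cal_min=200, cal_max=600, alpha=0.5))
# [0.0, 0.5, 0.75]
```

A larger `alpha` makes the control respond faster but pass through more noise; the right trade-off depends on the signal and on how quickly the interface is meant to react.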

12. What is it like? Is it similar to anything I have experienced?

That’s hard to say because there isn’t very much apparatus like this generally available. If you’ve ever worn ECG sensors in either a clinical or sporting setting, you’ll know what it’s like to see your physiological activity “mirrored” in this way. That’s one aspect. The closest equivalent is biofeedback, where physiological data is represented as a visual display or a sound in real-time, but biofeedback is relatively specialised and used mainly to treat clinical problems.

13. A lot of the technology involved sounds ‘medical’. Is this something hospitals would use?

The sensor technology is widely used by medical professionals to diagnose physiological problems and to monitor physiological activity. Physiological computing represents an attempt to bring this technology to a more mainstream population by using the same monitoring technology to improve human-computer interaction. In order to do this, it’s important to move the sensor technology from the static systems where the person is tethered by wires (as used by hospitals) to mobile, lightweight sensor apparatus that people can wear comfortably and unhindered as they work and play.

14. Who is working on this stuff?

Physiological computing is inherently multidisciplinary. The business of deciding which signals to use and how they represent the psychological state of the user is the domain of psychophysiology (i.e. inferring psychological significance from physiological signals). Real-time data analysis falls into the area of signal processing that can involve professionals with backgrounds in computing, mathematics and engineering. Designing wearable sensor apparatus capable of delivering good signals outside of the lab or clinical environment is of interest to people working in engineering and telemedicine. Deciding how to use psychophysiological signals to drive real-time adaptation is the domain of computer scientists, particularly those interested in human-computer interaction and human factors.

15. What about the privacy of my data?

Good question. Physiological computing inevitably involves a sustained period of monitoring the user. This information is, by definition, highly sensitive. An intruder could monitor the ebb and flow of user mood over a period of time. If the intruder could access software activity as well as physiology, he or she could determine whether a particular website or document elicited a certain reaction from the user. Most of us regard our unexpressed emotional responses as personal and private information. In addition, data collected via physiological computing could potentially be used to indicate medical conditions such as high blood pressure or heart arrhythmia. Privacy and data protection are huge issues for this kind of technology. It is important that the user exercises ultimate control with respect to: (1) what is being measured, (2) where it is being stored, and (3) who has access to that information.
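The three points of user control can be made explicit in software as a policy that is checked before data is recorded, stored or shared. The sketch below is a hypothetical design, not an existing standard or library: the class, field names, function names and example values are all illustrative.

```python
from dataclasses import dataclass
from typing import FrozenSet


# Hypothetical user-controlled privacy policy for a physiological computing system.
@dataclass(frozen=True)
class PrivacyPolicy:
    measured_signals: FrozenSet[str]    # (1) what may be measured
    storage_location: str               # (2) where data may be stored
    authorised_readers: FrozenSet[str]  # (3) who may access the data


def may_record(policy: PrivacyPolicy, signal: str) -> bool:
    """Only signals the user has opted into are recorded."""
    return signal in policy.measured_signals


def may_store(policy: PrivacyPolicy, location: str) -> bool:
    """Data may only be written to the location the user chose."""
    return location == policy.storage_location


def may_read(policy: PrivacyPolicy, requester: str, signal: str) -> bool:
    """A requester may read a signal only if it is recorded and they are authorised."""
    return may_record(policy, signal) and requester in policy.authorised_readers


policy = PrivacyPolicy(
    measured_signals=frozenset({"heart_rate"}),
    storage_location="local_device",
    authorised_readers=frozenset({"user", "physician"}),
)
print(may_read(policy, "physician", "heart_rate"))   # True
print(may_read(policy, "advertiser", "heart_rate"))  # False
```

Making the policy immutable (`frozen=True`) means any change to what is measured, where it is stored or who can see it has to be an explicit new policy, which is one way of keeping the user in control of those decisions.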

16. Where can I find out more?

There are a number of written and online sources regarding physiological computing. Almost all have been written for an academic audience. Here are a number of articles for further reading:

- Allanson, J., 2002. Electrophysiologically interactive computer systems. IEEE Magazine.

- Allanson, J., Fairclough, S.H., 2004. A research agenda for physiological computing. Interacting With Computers 16, 857-878.

- Allison, B.Z., Wolpaw, E.W., Wolpaw, J.R., 2007. Brain-computer interface systems: progress and prospects. Expert Review of Medical Devices 4, 463-474.

- Fairclough, S.H., 2009. Fundamentals of physiological computing. Interacting With Computers 21, 133-145.

- Gilleade, K.M., Dix, A., Allanson, J., 2005. Affective videogames and modes of affective gaming: assist me, challenge me, emote me. Proceedings of DiGRA 2005.

- Hettinger, L.J., Branco, P., Encarnação, L.M., Bonato, P., 2003. Neuroadaptive technologies: applying neuroergonomics to the design of advanced interfaces. Theoretical Issues in Ergonomics Science 4, 220-237.

- Morris, M., Guilak, F., 2009. Mobile Heart Health: Project Highlight. IEEE Pervasive Computing 8, 57-61.

- Picard, R.W., Klein, J., 2002. Computers that recognise and respond to user emotion: theoretical and practical implications. Interacting With Computers 14, 141-169.

- Picard, R.W., 2003. Affective computing: challenges. International Journal of Human-Computer Studies 59, 55-64.

- Pfurtscheller, G., Allison, B.Z., Brunner, C., Bauernfeind, G., Solis-Escalante, T., Scherer, R., Zander, T.O., Mueller-Putz, G., Neuper, C., Birbaumer, N., 2010. The hybrid BCI. Frontiers in Neuroscience 4, 1-11.

- Scerbo, M.W., Freeman, F.G., Mikulka, P.J., 2003. A brain-based system for adaptive automation. Theoretical Issues in Ergonomics Science 4, 200-219.

- Wilson, G.F., Russell, C.A., 2007. Performance enhancement in an uninhabited air vehicle task using psychophysiologically determined adaptive aiding. Human Factors 49, 1005-1018.