First of all, apologies for our blog “sabbatical” – the important thing is that we are now back with news of our latest research collaboration involving FACT (Foundation for Art and Creative Technology) and international artists’ collective Manifest.AR.

To quickly recap, our colleagues at FACT were keen to create a new commission that tapped into augmented reality technology and incorporated elements of our own work on physiological computing. Our last post (almost a year ago now, to our shame) described the time we spent with Manifest.AR last summer and our show-and-tell event at FACT. Fast-forward to the present: the Manifest.AR piece Invisible ARtaffects opened last Thursday as part of the Turning FACT Inside Out show.

During the initial period, we had a lot of discussion about blending AR and physiological computing. Our role was, firstly, to provide some technical input on the concepts produced by the artists but, as often happens when enthusiastic people get together, the collaboration became more about developing a shared and realistic vision for the pieces. When you put academics and artists together, developing a shared vision is an interesting exercise. I can only speak for us, but part of our job was almost to be the party-poopers who said “no, we can’t do this because it won’t work.” Another (much more rewarding) part of our role was to contribute to new types of interactive experience as they were being developed.

Unfortunately, funding issues prevented the show from being realised in its original glory, which would have featured three or four pieces of interactive art mediated by psychophysiological signals. However, the concepts for all the pieces are represented in the Invisible ARtaffects show, and I’ll walk through each with respect to its physiological computing aspects.

The first I’d like to describe is John Craig Freeman’s “EEG AR: Things We Have Lost.” This is an elegant piece for which John collected interview data on the streets of Liverpool by asking the public one simple question: “what have you lost?” The answers ranged from the fairly trite (I lost my keys) to the humorous (I lost my hair) and the very moving (I lost my wife). John then used these answers to create a series of augments.

John was working with a NeuroSky EEG unit, using the standard ‘meditation’ scores from the system to make the various augments materialise on a phone or tablet. We have always built physiological computing systems on the rationale that signals should create meaningful interaction, where changes at the interface resonate with physiological self-regulation, e.g. the screen goes red for anger and blue for calm. From speaking to Craig and his colleague Will Pappenheimer, it was obvious that our artist collaborators were using the EEG differently, to generate ‘chance’ or ‘random’ forms of interaction. In other words, wearing the NeuroSky and relaxing would provoke a random augment associated with an answer to the question “what have you lost?” There is a 2005 conference paper that describes this approach in the context of biofeedback. Speaking personally, I understand the motivation for using EEG as a random number generator in a performance piece, but I struggle to see the same approach working as an interaction dynamic that informs and enriches the user’s experience.
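To make the contrast between the two design rationales concrete, here is a minimal Python sketch of each. It is not John's code: the augment list, the threshold of 60 and both function names are hypothetical, and we assume the meditation score arrives as a number from 0 to 100.

```python
import random

# Hypothetical augments, one per interview answer quoted above.
AUGMENTS = ["I lost my keys", "I lost my hair", "I lost my wife"]

MEDITATION_THRESHOLD = 60  # assumed cut-off on a 0-100 meditation score


def chance_augment(meditation, rng):
    """'Chance' approach: relaxing past the threshold releases a randomly chosen augment."""
    if meditation >= MEDITATION_THRESHOLD:
        return rng.choice(AUGMENTS)
    return None  # not relaxed enough: nothing materialises


def feedback_colour(meditation):
    """'Meaningful' approach: the interface mirrors the signal, red when tense, blue when calm."""
    calm = max(0, min(100, meditation)) / 100.0  # clip to 0-100, then scale to 0-1
    return (round(255 * (1 - calm)), 0, round(255 * calm))  # (R, G, B)
```

The first function uses the signal only as a gate on a random draw, so which augment appears tells the user nothing about their state. The second maps the same score continuously onto something the user can watch and learn to regulate, which is the kind of resonance we usually design for.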

Concept Image for EEG AR: Things We Have Lost

Sander Veenhoof developed an AR concept that was close to our hearts (quite literally), and to the heart of any other academic who attends a lot of conferences. His idea was to monitor the engagement of an audience member during a presentation and to introduce augments to recapture their attention if they became bored. Hopefully the speaker at the podium remains unaware of how many augments are being activated during their talk; the idea of this system being active during one of my undergraduate lectures fills me with dread.
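Sander's piece exists as a concept, so no sensor or scoring method is specified. As a minimal sketch, assuming a hypothetical engagement score between 0 and 1 arrives at a regular rate, a trigger could average recent samples, fire an augment when that average drops below a threshold, and then wait out a cooldown so augments do not fire continuously. The class name, window size, threshold and cooldown are all illustrative choices.

```python
from collections import deque


class BoredomTrigger:
    """Fire an augment when mean engagement over a sliding window drops below a threshold."""

    def __init__(self, window=30, threshold=0.3, cooldown=60):
        self.samples = deque(maxlen=window)  # most recent engagement scores in [0, 1]
        self.threshold = threshold
        self.cooldown = cooldown  # number of samples to ignore after a trigger
        self.wait = 0

    def update(self, engagement):
        """Add one sample; return True if an augment should be introduced now."""
        self.samples.append(engagement)
        if self.wait > 0:
            self.wait -= 1  # still cooling down from the last augment
            return False
        window_full = len(self.samples) == self.samples.maxlen
        if window_full and sum(self.samples) / len(self.samples) < self.threshold:
            self.wait = self.cooldown
            return True
        return False
```

Averaging over a window means a single distracted moment does not count as boredom; only a sustained dip does.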

Concept Image for Human Sensors

The physiological computing component behind “I Must Be Seeing Things” by John Cleater is similar to the EEG AR piece in that the person must relax for an augment to materialise before their eyes. What is interesting about John’s piece is that he uses AR combined with psychophysiological regulation to enhance and adapt an actual physical object. The person views a book of sketched objects through a phone or tablet and, as they relax, an augmented object is overlaid on the sketch and fills out its blank spaces. I liked the idea of materialising a virtual object in this way, and it is a neat example of mixed reality with a physiological computing component.
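One way to make an overlay fill out gradually with relaxation is to drive its opacity from a smoothed relaxation score, so that momentary fluctuations do not make the object flicker. The sketch below assumes a hypothetical relaxation score normalised to the range 0 to 1 and uses exponential smoothing; we do not know how John's piece actually maps the signal onto the overlay, and both function names are illustrative.

```python
def update_opacity(opacity, relaxation, smoothing=0.1):
    """Move the overlay's opacity a fraction of the way toward the current relaxation level.

    relaxation is a hypothetical score in [0, 1]; values outside that range are clipped.
    """
    target = min(1.0, max(0.0, relaxation))
    return opacity + smoothing * (target - opacity)


def simulate(relaxation_readings, smoothing=0.1):
    """Return the opacity after each reading, starting from a fully transparent overlay."""
    opacity, history = 0.0, []
    for r in relaxation_readings:
        opacity = update_opacity(opacity, r, smoothing)
        history.append(opacity)
    return history
```

With `smoothing=0.1`, a steady relaxation score of 1.0 moves the overlay 10% of the remaining distance towards full opacity at each reading, so the object emerges over several readings rather than popping in, and fades back if the person tenses up.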

I Must Be Seeing Things

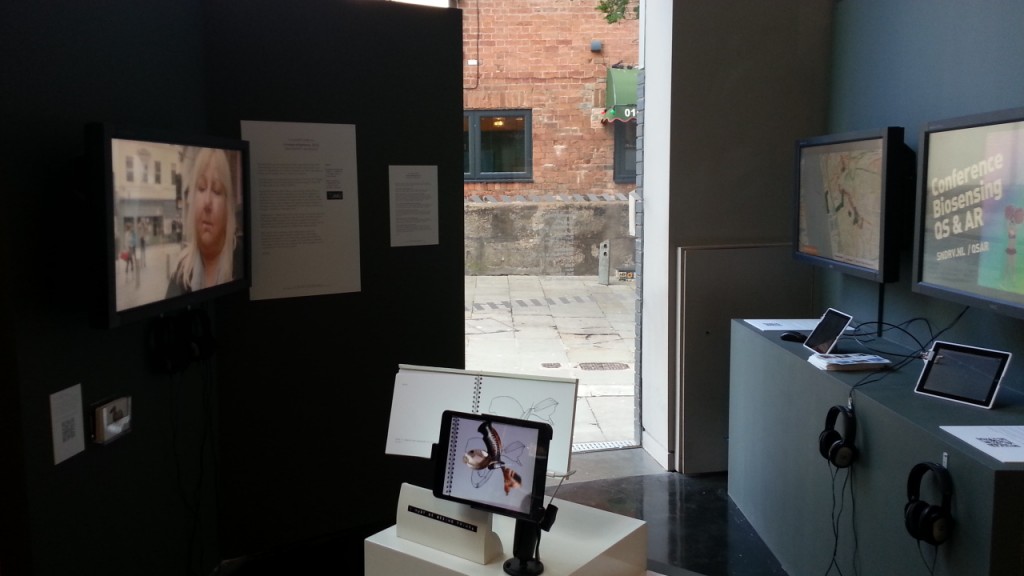

All the pieces described so far appear in the FACT show only as concepts, screenshots, animations and movies. Biomer Skelters by Will Pappenheimer and Tamiko Thiel is different because it is available in interactive form as part of the show. The central concept of Biomer Skelters is to create virtual plants in an urban space and thereby repopulate the city’s lost vegetation.

Concept Image for Biomer Skelters


What Will and Tamiko have created is a mixed-reality experience in which the person walks the streets of the city, leaving a trail of virtual vegetation like breadcrumbs that can be viewed on a smartphone or tablet. Biomer Skelters incorporates a physiological computing component that Will created with his students at Pace University. As the person walks, they see a representation of their heart rate. Heart rate naturally rises during walking, particularly at a fast pace, so the person must walk while keeping their heart rate relatively low in order to maximise the number of plants created in their wake. Why is maximising the number of plants important? Because the piece has a competitive element: Will and Tamiko designed the software to spawn two types of plants, native species or invasive tropical species, and participants play as two individuals or two teams whose objective is to “out-plant” one another. The plants from both sides can be viewed “in situ” (see image below) or on a map.
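The planting rule described above can be sketched as a simple threshold: at regular distance intervals the walker gets a chance to plant, which succeeds only if their heart rate is close to their resting rate. This is our illustration of the rule, not the Pace University code; the 10 m spacing, the 20 bpm margin, the team labels and all class and field names are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Walker:
    team: str  # hypothetical team label, e.g. "native" or "invasive"
    resting_hr: float  # resting heart rate in beats per minute
    metres_since_plant: float = 0.0


@dataclass
class Game:
    plant_spacing_m: float = 10.0  # assumed: one chance to plant per 10 m walked
    hr_margin_bpm: float = 20.0  # assumed: plant only if HR is within 20 bpm of resting
    plants: dict = field(default_factory=dict)  # team -> list of (lat, lon) positions

    def step(self, walker, metres, heart_rate, position):
        """Process one location update; return True if a plant was spawned."""
        walker.metres_since_plant += metres
        if walker.metres_since_plant < self.plant_spacing_m:
            return False  # not far enough since the last chance
        walker.metres_since_plant = 0.0  # the planting chance is used up either way
        if heart_rate <= walker.resting_hr + self.hr_margin_bpm:
            self.plants.setdefault(walker.team, []).append(position)
            return True
        return False  # heart rate too high: no plant this time

    def score(self):
        """Number of plants per team."""
        return {team: len(spots) for team, spots in self.plants.items()}
```

Because a chance is consumed whether or not it succeeds, rushing ahead with a raised heart rate simply wastes ground, which is what makes a calm, steady walk the winning strategy in this sketch.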

Screen shot of Biomer Skelters


Biomer Skelters combines several elements that (to my knowledge) have not been brought together before: a mixed-reality concept using a camera view, a physiological computing component and a geo-located game mechanic linked to biofeedback. The tests we have run so far show that the code holds up and the system works well, although much depends on having a good-quality smartphone.

We really enjoyed working on these pieces, and there is plenty here to follow up, both in interactive art and in AR-based games.
