I’d like to begin the new year on a philosophical note. A lot of research in physiological computing is concerned with the practicalities of developing this technology. But what about the conceptual implications of using these systems (assuming that they are constructed and reach the marketplace)? At a fundamental level, physiological computing represents an extension of the human nervous system. This is nothing new. Our history is littered with tools and artifacts, from the plough to the internet, designed to extend the ‘reach’ of human senses and capabilities. As our technology becomes more compact, we become increasingly reliant on tools to augment our cognitive capacity. This can be as trivial as using the address book on a mobile phone as a shortcut to “remembering” a friend’s number or having an electronic reminder of an imminent appointment. This kind of “scaffolded thinking” (Clark, 2004) represents a merger between a human limitation (long-term memory) and a technological solution; we’ve effectively subcontracted part of our internal cognitive store to an external silicon one. Andy Clark argues persuasively in his book that these human-machine mergers are a perfectly natural consequence of human-technology co-evolution.

If we use technology to extend the human nervous system, does this also represent a natural consequence of the evolutionary trajectory that we share with machines? It is one thing to delegate information storage to a machine, but granting access to the central nervous system, including the inner sanctum of the brain, represents a much more intimate category of human-machine merger.

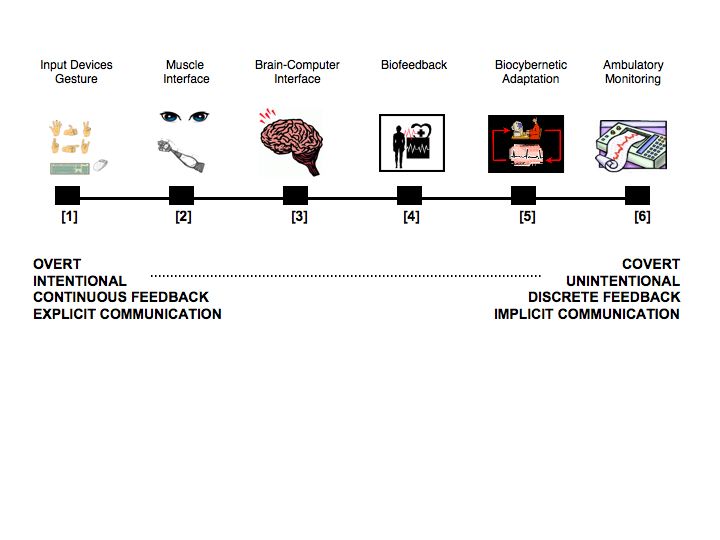

In the case of muscle interfaces, where EMG activity or eye movements function as proxies for mouse or touchpad input, I feel the nervous system has been extended in a modest way: gestures are simply recorded at a different place, so rather than looking and pointing, you can now just look. BCIs represent a more interesting case. Many are designed to completely circumvent the conventional motor component of input control. This makes BCIs brilliant candidates for assistive technology, and effective usage of a BCI device feels slightly magical because it is the ultimate in remote control. But like muscle interfaces, all we have done is create an alternative route for human-computer input. The exciting subtext to BCI use is how the user learns to self-regulate brain activity in order to successfully operate this category of technology. In my view, the volitional control of brain activity is an extension of the human nervous system (or to be more specific, an extension of how we control the human nervous system), albeit one that occurs as a side effect or consequence of technology use.

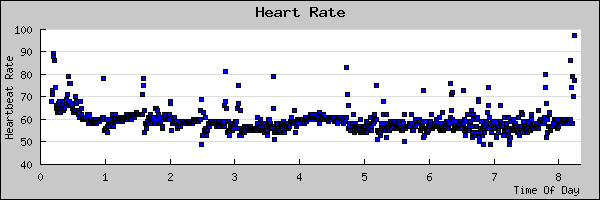

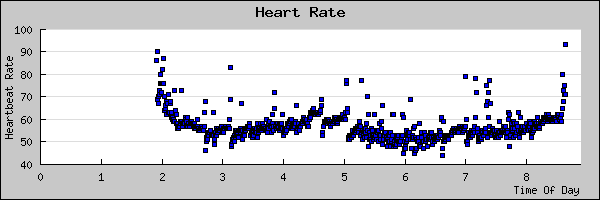

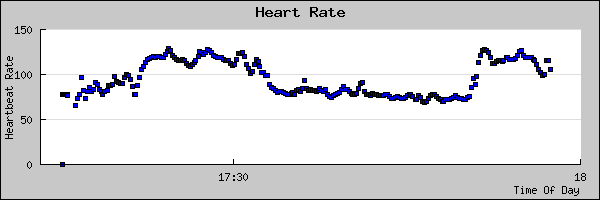

Technologies based on biofeedback mechanics, such as biocybernetic adaptation and ambulatory monitoring, literally extend the human nervous system by transforming a feeling/thought/experience that is private, vague and subjective into an observable representation that is public, quantified and objective. In addition, biocybernetic systems that monitor changes in physiology to trigger adaptive system responses take the concept further – these systems don’t merely represent the activity of the nervous system, they are capable of acting on the basis of this activity, completely bypassing human awareness if necessary. That prospect may alarm many, but one shouldn’t be too disturbed – the autonomic nervous system routinely does hundreds of things every minute just to keep us conscious and alert, without ever asking or intruding on consciousness. Of course, the process of autonomic control can go awry (panic attacks are one example), and it is telling that biofeedback represents one way to correct this instance of autonomic malfunction. The therapy works by making a hidden activity quantifiable and open to inspection, and in doing so, provides the means for the individual to “retrain” their own autonomic system via conscious control. This dynamic runs through those systems concerned with biocybernetic control and ambulatory monitoring. Changes at the user interface provide feedback on emotion or cognition and invite the user to extend self-awareness, and in doing so, to enhance control over their own central nervous systems. As N. Katherine Hayles puts it in her book on posthumanism: “When the body is integrated into a cybernetic circuit, modification of the circuit will necessarily modify consciousness as well. Connected to multiple feedback loops to the objects it designs, the mind is also an object of design.”

So what we’re really talking about is extending our human nervous systems via technology and, in doing so, enhancing our ability to self-regulate those nervous systems. To slightly adapt a phrase from the autopoietic analysis of the nervous system, we are observing systems observing ourselves observing (ourselves).

Rosalind Picard, among others, has argued that increased self-awareness and self-control of bodily states is a positive aspect of this kind of technology. In some cases, such as anger management and stress reduction, I can see clear arguments to support this position. On the other hand, I can also see potential for confusion and distress due to disembodiment (I don’t feel angry but the computer says I do – so which is me?) and invasion of privacy (I know you say you’re not angry but the computer says you are).

If we are to extend the nervous system, I believe we must also extend our conception of the self – beyond the boundaries of the skull and the skin – in order to incorporate feedback from a computer system into our strategies for self-regulation. But we should not be sucked into simplistic conflicts by these devices. As N. Katherine Hayles points out, border crossings between humans and machines are achieved by analogy, not simple re-representation – the quantified self out there and the subjective self in here occupy different but overlapping spheres of experience. We must bear this in mind if we, as users of this technology, are to reconcile the plenitude of embodiment with the relative sparseness of biofeedback.