With regard to the development of physiological computing systems, whether they are BCI applications or fall into the category of affective computing, there seem (to me) to be two distinct research communities at work. The first (and older) community consists of university-based academics, like myself, doing basic research on measures, methods and prototypes with the primary aim of publishing our work in conferences and journals. For the most part, we are a mixture of psychologists, computer scientists and engineers, many of whom have an interest in human-computer interaction. The second community formed around the availability of commercial EEG peripherals, such as the Emotiv and NeuroSky headsets. Some members of this community are academics and others are developers; I suspect many are dedicated gamers. They are looking to build applications and hacks that embellish the interactive experience, with a strong emphasis on commercialisation.

There are many differences between the two groups. My own academic group is ‘old-school’ in many ways, motivated by research issues and defined by the usual hierarchies associated with specialisation and rank. The newer group is more inclusive (the tag-line on the NeuroSky site is “Brain Sensors for Everyone”); they basically want to build stuff and preferably sell it.

Any tension between the two communities stems largely from different kinds of motivation. In addition, the academic community tends towards the conservative, whereas the second group, fuelled by marketeer-speak of “mind-reading” and “reading thoughts”, is much more adventurous and optimistic about the capabilities of the technology (see this recent article from the WSJ for an example). When I think of the two groups, I’m tempted by an analogy with Aesop’s fable of the Tortoise and the Hare, but it doesn’t really fit because the two communities are not running the same race.

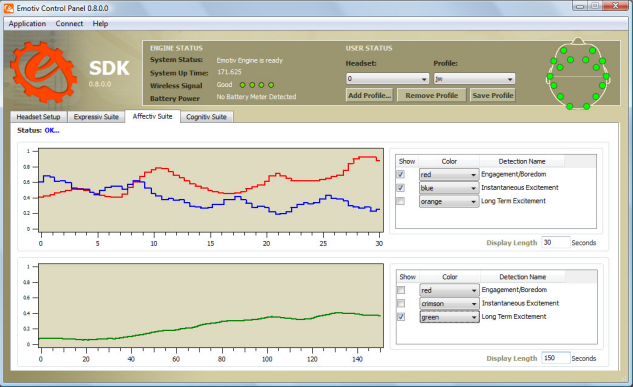

Recently I had the opportunity to play around with a commercial EEG device and took a look at the tools available to both the academic research community and software developers. Both Emotiv and NeuroSky have adopted a two-tier approach: EEG data are presented in two forms, which I’ll call the black box and glass box versions. The Emotiv comes with three different ‘suites’ designed to capture facial expression, emotional states and cognitive states. Below is a screenshot from the Affectiv (emotional) suite.

As you can see, engagement and boredom are presented as a red line on a unidimensional scale (i.e. high engagement = low boredom and vice versa). Two other plots are available, corresponding to long- and short-term excitement. This is a black box approach in that an algorithm is working behind the display, possibly based on the relationship between alpha and beta waves, that produces a standardised score between 0 and 1. For the developer with no specific training in EEG collection or analysis, this algorithm is an ideal way to get started with data collection and to experiment with the system. For the academic, of course, this kind of approach does not work at all: we do not like to work with algorithms that have not been formally validated in a peer-reviewed output. We are not so naive that we expect a commercial company to publish the detailed formula behind the algorithm, but speaking personally, I’m a little disappointed that the data collection exercise underpinning the development of these algorithms is not in the public domain (there is a reference to 1000s of hours of data recording in this less-than-probing feature in Wired UK magazine a few years ago). NeuroSky have a similar display, with ‘Meditation’ scores that are also produced by black box algorithms.
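Neither company publishes its algorithm, so what follows is not how Emotiv or NeuroSky compute their scores. As a hedged illustration of what a 0-to-1 "engagement" score could involve, here is a minimal sketch that assumes per-epoch band powers are already available, uses the β/(α+θ) engagement index from the biocybernetic-loop literature, and rescales it against a per-user calibration range (`lo`, `hi`). The function names and all numbers are hypothetical.

```python
def engagement_index(theta: float, alpha: float, beta: float) -> float:
    """Ratio of beta power to alpha + theta power; higher values are read as more 'engaged'."""
    denominator = alpha + theta
    if denominator <= 0:
        raise ValueError("alpha + theta power must be positive")
    return beta / denominator


def to_unit_scale(value: float, lo: float, hi: float) -> float:
    """Min-max rescale against a per-user calibration range, clamped to [0, 1]."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))


# Hypothetical band powers (arbitrary units) and a hypothetical calibration range.
raw = engagement_index(theta=4.0, alpha=6.0, beta=5.0)  # 5 / (6 + 4) = 0.5
score = to_unit_scale(raw, lo=0.25, hi=1.25)            # (0.5 - 0.25) / 1.0 = 0.25
```

Even in this toy version, every hidden choice (which ratio, which electrodes, how the range is calibrated, how the score is clamped) changes what a score of 0.7 means, and none of those choices can be checked from the output alone.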

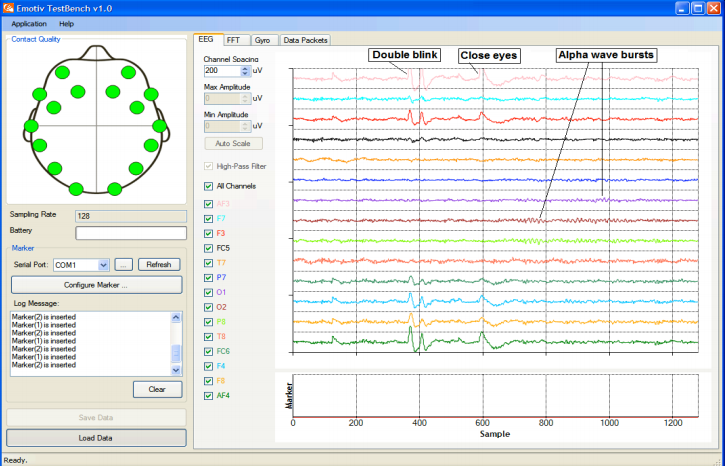

To their credit, both Emotiv and NeuroSky would like the research community to use their sensors, and they provide access to raw data, as you would expect with laboratory-grade EEG apparatus. Below is a screenshot from Test Bench, the Emotiv application for viewing raw data. These raw data can be further analysed via a fast Fourier transform (FFT) to yield activity in the classic EEG bands.
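A minimal sketch of that band-power step, written in standard-library Python so it runs anywhere. It assumes a single channel, a 128 Hz sampling rate and one-second epochs; the band edges are one common convention rather than a fixed standard, and `band_powers` is a hypothetical helper. It uses a plain discrete Fourier transform, which gives the same spectrum an FFT would, only more slowly; for real recordings a library routine such as `numpy.fft.rfft` is the sensible choice.

```python
import cmath
import math

FS = 128  # assumed sampling rate in Hz; check the specification of your own device

# One common convention for the classic bands (Hz, lower edge inclusive, upper exclusive).
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}


def power_spectrum(x, fs):
    """Power at the non-negative DFT frequencies of a real signal, via a plain O(n^2) DFT.

    No window is applied, so expect some spectral leakage for frequencies
    that do not fall exactly on a bin.
    """
    n = len(x)
    mean = sum(x) / n
    x = [v - mean for v in x]  # remove the DC offset before transforming
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)           # frequency of bin k in Hz
        power.append(abs(coeff) ** 2 / n)  # squared magnitude, scaled by epoch length
    return freqs, power


def band_powers(x, fs=FS, bands=BANDS):
    """Sum spectral power inside each band (hypothetical helper)."""
    freqs, power = power_spectrum(x, fs)
    return {
        name: sum(p for f, p in zip(freqs, power) if lo <= f < hi)
        for name, (lo, hi) in bands.items()
    }


# Synthetic one-second epoch: a 10 Hz (alpha) sine plus a weaker 20 Hz (beta) sine.
epoch = [
    math.sin(2 * math.pi * 10 * t / FS) + 0.5 * math.sin(2 * math.pi * 20 * t / FS)
    for t in range(FS)
]
bp = band_powers(epoch)
print({name: round(p, 3) for name, p in bp.items()})
```

For the synthetic epoch, the alpha and beta entries come out at about 32 and 8, with delta and theta close to zero: the power lands in the band that contains each sine.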

This glass box approach works very well for the research community. This group has some expertise in working with these signals and can construct its own algorithms, so rather than developing interaction mechanics that ‘sit’ on top of an engagement score between 0 and 1, researchers can construct systems completely from the bottom up. The non-EEG expert can also access this level of detail, of course, but would need to do some reading to understand how to interpret the different bands, particularly with respect to topography, i.e. the way the prevalence of activity in each frequency band varies across scalp sites.
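To make the topography point concrete, here is a hedged sketch of one small bottom-up step: converting absolute band powers at each scalp site into relative powers, so that sites with different overall signal strength can be compared. The site labels follow the 10-20 naming convention, but the power values are made up purely for illustration, and the helper names are hypothetical.

```python
def relative_band_power(site_powers):
    """Convert absolute band powers per scalp site into fractions of that site's total power."""
    out = {}
    for site, bands in site_powers.items():
        total = sum(bands.values())
        if total <= 0:
            raise ValueError(f"no power recorded at {site}")
        out[site] = {band: p / total for band, p in bands.items()}
    return out


def dominant_band(site_powers):
    """Return the band with the largest relative power at each site."""
    rel = relative_band_power(site_powers)
    return {site: max(bands, key=bands.get) for site, bands in rel.items()}


# Made-up absolute band powers (arbitrary units) for a frontal and an occipital site.
powers = {
    "F3": {"theta": 6.0, "alpha": 3.0, "beta": 3.0},
    "O1": {"theta": 2.0, "alpha": 12.0, "beta": 2.0},
}
print(dominant_band(powers))
```

With the eyes closed, alpha is typically most prominent over occipital sites such as O1, which is the kind of topographic regularity these invented numbers imitate; interpreting a real map requires knowing which regularities to expect at which sites.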

As I said earlier, the two-tier approach adopted by both companies represents a tacit attempt to meet the needs of both communities, but what happens when we see a degree of “crossover”? The first kind, and the easiest to describe, is when an academic submits a research paper built on a black box algorithm. This does happen occasionally and is completely inappropriate, because we’re unable to understand why a particular system design or interaction dynamic worked or did not work when the measures driving the interaction remain secret. Also, I’m a great believer in multidisciplinary teams working on physiological computing systems, so when I see this kind of thing in academia, it kind of bugs me, because I perceive the use of these black box algorithms as a way of shutting psychologists like me out of the picture.

The same kind of problem can arise for the developer working with a commercial system. There are many barriers to consistent system performance when working with ambulatory EEG sensors in the field (as opposed to a controlled laboratory environment). First, you have to get the system to work in the way it was designed to work. Then you have to get it to work with a range of different people (EEG is affected by skull thickness, there are individual differences in alpha wave production, etc.). Finally, you have to get it to work with a range of people across a number of different environments (in a sitting room, on a train). What happens if (or rather when) the developer must enter troubleshooting mode? The developer can play with various criteria, calibration and triggering levels, but if that doesn’t work, they must wonder whether the black box algorithm works for their particular application. And if they want to explore the raw signals, this opens up a Pandora’s box of possibilities, because when you are dealing with power in different EEG frequency bands across different sites, the complexity of getting the system to work has just doubled and may subsequently triple (even with a respectable degree of expertise).
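One common way to approach calibration and triggering, sketched here under stated assumptions, is to express each new reading relative to a short resting baseline recorded from the same person, and to switch an adaptation on and off at two different thresholds (hysteresis) so the interface does not flicker when the signal hovers near a single cut-off. The class name and the default thresholds (`on_z=1.5`, `off_z=0.5`) are hypothetical starting points, not values from either vendor.

```python
import statistics


class BaselineTrigger:
    """Hypothetical per-user calibration plus a two-threshold (hysteresis) trigger."""

    def __init__(self, baseline, on_z=1.5, off_z=0.5):
        if len(baseline) < 2:
            raise ValueError("need at least two baseline samples")
        if off_z >= on_z:
            raise ValueError("off_z must be below on_z")
        # Calibrate against this person's resting data in this setting.
        self.mean = statistics.fmean(baseline)
        self.sd = statistics.pstdev(baseline)
        if self.sd == 0:
            raise ValueError("baseline has zero variance")
        self.on_z, self.off_z = on_z, off_z
        self.active = False

    def z(self, value):
        """Distance of a new reading from the baseline mean, in baseline standard deviations."""
        return (value - self.mean) / self.sd

    def update(self, value):
        """Switch on above on_z, switch off only once the reading falls to off_z or below."""
        z = self.z(value)
        if not self.active and z >= self.on_z:
            self.active = True
        elif self.active and z <= self.off_z:
            self.active = False
        return self.active


# Hypothetical baseline of some per-epoch measure, then a stream of new readings.
trigger = BaselineTrigger([2, 4, 4, 4, 5, 5, 7, 9])
states = [trigger.update(v) for v in [7, 8, 6.5, 6]]
```

Here the trigger stays on at 6.5 even though that reading would not have switched it on, which is exactly the damping hysteresis provides. Re-recording the baseline in each new setting is one way to absorb some individual and environmental differences, though it cannot rescue a measure that is wrong to begin with.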

I guess what I’m saying amounts to this: black box algorithms are only genuinely useful if you know how they work; otherwise, thorough troubleshooting is impossible. On the other hand, glass box interfaces like the Test Bench above are only really useful if you have a good deal of EEG expertise and know how to set up systematic testing under controlled conditions.

My concern (and this is not confined to Emotiv but applies equally to NeuroSky) is that many developers are sufficiently knowledgeable to build stuff using these sensors but lack the expertise to troubleshoot their systems effectively, i.e. to make them work across individuals and in different settings. This is not intended as a slur or to be patronising; I know from personal experience just how difficult troubleshooting these interaction dynamics can be (and I’ve never tried to do it outside the laboratory). There are dozens of reasons why your system may not work: the wrong measures, incorrect interpretation, interference from eye blinks and facial muscles, gross movement artifacts, lag between analysis and response at the interface, etc. Unfortunately, we have yet to produce a development protocol to support the construction and evaluation of a biocybernetic loop, which I believe is a necessary step for developers and researchers alike to fully exploit this kind of apparatus.
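As one example of working through that list: eye blinks and gross movements usually appear as large deflections that dwarf ongoing EEG. A common first-pass check rejects any epoch whose peak-to-peak amplitude exceeds a threshold. In this minimal sketch the 100 µV default is an assumption (a frequently used rule of thumb that should be tuned per device and per person), the function names are hypothetical, and muscle artifacts, which are smaller and high-frequency, would need a different test.

```python
def reject_artifacts(epochs, max_ptp_uv=100.0):
    """Return (clean_indices, rejected_indices) using a peak-to-peak amplitude threshold.

    epochs: list of single-channel epochs, each a list of samples in microvolts.
    """
    clean, rejected = [], []
    for i, epoch in enumerate(epochs):
        ptp = max(epoch) - min(epoch)  # peak-to-peak amplitude of this epoch
        (rejected if ptp > max_ptp_uv else clean).append(i)
    return clean, rejected


def rejection_rate(epochs, max_ptp_uv=100.0):
    """Fraction of epochs rejected; a persistently high rate is itself a troubleshooting clue."""
    if not epochs:
        return 0.0
    _, rejected = reject_artifacts(epochs, max_ptp_uv)
    return len(rejected) / len(epochs)


# Hypothetical epochs (µV): the second and fourth contain blink-sized swings.
epochs = [[-10.0, 5.0, 12.0], [-40.0, 80.0, 0.0], [20.0, 20.0, 20.0], [-60.0, 50.0]]
clean, rejected = reject_artifacts(epochs)  # clean == [0, 2], rejected == [1, 3]
```

Logging the rejection rate per person and per setting gives a cheap way to tell "the measure does not work" apart from "the signal was mostly artifact".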

Great point on the different standards and the potential for collaboration between two very different communities. I recently saw this video of “EEG” and music creation, which features a great project (with prominent display of the sponsor). The heavy editing makes a clear analysis of what the musicians are actually doing impossible, but I believe that this work and many others show how valuable these sensor systems can be (even without restricting oneself to purely neurophysiological signal sources). It is a great idea, and a good time, to start developing standards and helpful guidelines that make it easier for the work of both communities to come together.

Thanks for your comment, Christian. I tried to write that post in a non-judgemental way, which is difficult for an academic 🙂 But the two communities are here and we’re starting to see some useful cross-talk; for example, I recently reviewed a paper that compared an Emotiv with a clinical-quality EEG in a systematic way. On the other hand, I’ve also been asked to review work where the researchers relied solely on the proprietary Emotiv software for their EEG quantification, which is inappropriate for peer review. I agree that the sensor systems are valuable and have great utility for developing systems to be used in the field with minimal set-up. I’m just frustrated that commercial interests inevitably lead to a lot of hype and a lack of transparency about the analysis software developed for these systems. The absence of a solid foundation makes building, developing and selling “brain sensors for everyone” much harder for developers than it needs to be.
