In last week’s excellent Bad Science article from The Guardian, Ben Goldacre puts his finger on a topic that I think is particularly relevant for physiological computing systems. He quotes press reports about MRI research into “hypoactive sexual desire response” – no, I hadn’t heard of it either, it’s a condition where the person has low libido. In this study women with the condition and ‘normals’ viewed erotic imagery in the scanner. A full article on the study from the Mail can be found here but what caught the attention of Bad Science is this interesting quote from one of the researchers involved: “Being able to identify physiological changes, to me provides significant evidence that it’s a true disorder as opposed to a societal construct.”

Author Archives: Steve Fairclough

Valve experimenting with physiological input for games

This recent interview with Gabe Newell of Valve caught our interest because it’s so rare that a game developer talks publicly about the potential of physiological computing to enhance the experience of gamers. The idea of using live physiological data feeds in order to adapt computer games and enhance game play was first floated by Kiel in these papers way back in 2003 and 2005. Like Kiel, in my writings on this topic (Fairclough, 2007; 2008 – see publications here), I focused exclusively on two problems: (1) how to represent the state of the player, and (2) what could the software do with this representation of the player state. In other words, how can live physiological monitoring of the player state inform real-time software adaptation? For example, the software might make the game harder, increase the music or offer help (a set of strategies that Kiel summarised in three categories: challenge me/assist me/emote me) – but it must make these adjustments in real time in order to enhance game play.
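To make the second problem concrete, here is a minimal sketch of how a player-state representation might be mapped onto the three categories. Everything in it is an assumption made for illustration: the two state dimensions (`arousal`, `frustration`), their 0 to 1 scaling, the thresholds and the rule order are hypothetical and are not taken from Kiel’s papers or from my own.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PlayerState:
    # Hypothetical, normalised (0..1) estimates derived from physiology.
    arousal: float
    frustration: float


def choose_adaptation(state: PlayerState,
                      low: float = 0.3,
                      high: float = 0.7) -> Optional[str]:
    """Pick one adaptation category, or None to leave the game alone.

    The thresholds and the order of the rules are illustrative assumptions.
    """
    if state.frustration >= high:
        return "assist me"      # e.g. offer help
    if state.arousal <= low:
        return "challenge me"   # e.g. make the game harder
    if state.arousal < high:
        return "emote me"       # e.g. increase the music
    return None                 # player already highly aroused, not frustrated


if __name__ == "__main__":
    print(choose_adaptation(PlayerState(arousal=0.1, frustration=0.1)))
```

A real system would call something like this on every update of the player state, which is where the real-time requirement bites.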

Mobile Monitors and Apps for Physiological Computing

I always harbored two assumptions about the development of physiological computing systems that have only become apparent (to me at least) as technological innovation seems to contradict them. First of all, I thought nascent forms of physiological computing systems would be developed for desktop systems where the user stays in a stationary and more-or-less sedentary position, thus minimising the probability of movement artifacts. Also, I assumed that physiological computing devices would only ever be achieved as coordinated holistic systems. In other words, specific sensors linked to a dedicated controller that provides input to adaptive software, all designed as a seamless chain of information flow.

Functional vocabulary: an issue for Emotiv and Brain-Computer Interfaces

The Emotiv system is an EEG headset designed for the development of brain-computer interfaces. It uses 12 dry electrodes (i.e. no gel necessary), communicates wirelessly with a PC and comes with a range of development software to create applications and interfaces. If you watch this 10min video from TEDGlobal, you get a good overview of how the system works.

First of all, I haven’t had any hands-on experience with the Emotiv headset and these observations are based upon what I’ve seen and read online. But the talk at TED prompted a number of technical questions that I’ve been unable to satisfy in the absence of working directly with the system.

Better living through affective computing

I recently read a paper by Rosalind Picard entitled “emotion research for the people, by the people.” In this article, Prof. Picard has some fun contrasting engineering and psychological perspectives on the measurement of emotion. Perhaps I’m being defensive, but she seemed to enjoy poking fun at the psychologists more than the engineers. The central impasse that she identified goes something like this: engineers develop sensor apparatus that can deliver a whole range of objective data whilst psychologists have decades of experience with theoretical concepts related to emotion, so why haven’t people really benefited from their union through the field of affective computing? Prof. Picard correctly identifies a reluctance on the part of the psychologists to define concepts with sufficient precision to aid the work of the engineers. What I felt was glossed over in the paper was the other side of the problem, namely the willingness of engineers to attach emotional labels to almost any piece of psychophysiological data, usually in the context of badly-designed experiments (apologies to any engineers reading this, but I wanted to add a little balance to the debate).

iBrain

I just watched a TEDMED talk about the iBrain device via this link on the excellent Medgadget resource. The iBrain is a single-channel EEG recording collected via ‘dry’ electrodes where the data is stored in a conventional handheld device such as a cellphone. In my opinion, the clever part of this technology is the application of mathematics to wring detailed information out of a limited data set – it’s a very efficient strategy.

The hardware looks to be fairly standard – a wireless EEG link to a mobile device. But its simplicity provides an indication of where this kind of physiological computing application could be going in the future – mobile monitoring for early detection of medical problems piggy-backing onto conventional technology. If physiological computing applications become widespread, this kind of proactive medical monitoring could become standard. And the main barrier to that is non-intrusive, non-medicalised sensor development.

In the meantime, Neurovigil, the company behind the product, recently announced a partnership with Swiss pharmaceutical giants Roche who want to apply this technology to clinical drug trials. I guess the methodology forces the drug companies to consider covert changes in physiology as a sensitive marker of drug efficacy or side-effects.

I like the simplicity of the iBrain (1 channel of EEG) but the speaker makes some big claims for their analysis; the implicit ones deal with the potential of EEG to identify neuropathologies. That may be possible but I’m sceptical about whether 1 channel is sufficient. The company have obviously applied their pared-down analysis to sleep stages with some success but I was left wondering what added value the device provided compared to less-intrusive movement sensors used to analyse sleep behaviour, e.g. the Actiwatch.

In the shadow of the polygraph

I was reading this short article in The Guardian today about the failure of polygraph technologies (including fMRI versions and voice analysis) to deliver data that was sufficiently robust to be admissible in court as evidence. Several points made in the article prompted a thought that the development of physiological computing technologies, to some extent, lives in the shadow of the polygraph.

Think about it. Both the polygraph and physiological computing aim to transform personal and private experience into quantifiable data that may be observed and assessed. Both capture unconscious physiological changes that may signify hidden psychological motives and agendas, subconscious or otherwise – and of course, both involve the attachment of sensor apparatus. This convergence means that both technologies are notoriously difficult to validate (hence the problems of polygraph evidence in court) – and that seems true whether we’re talking about the use of the P300 for “brain fingerprinting” or the use of ECG and respiration to capture a specific category of emotion.

Whenever I do a presentation about physiological computing, I can almost sense antipathy to the concept from some members of the audience because the first thing people think about is the polygraph and the second group of thoughts that logically follow are concerns about privacy, misuse and spying. To counter these fears, I do point out that physiological computing, whether it’s a game or a means of adapting a software agent or a brain-computer interface, has been developed for very different purposes; this technology is intended for personal use, it’s about control for the individual in the broadest sense, e.g. to control a cursor, to promote reflection and self-regulation, to make software reactive, personalised and smarter, to ensure that the data protection rights of the individual are preserved – especially if they wish to share their data with others.

But everyone knows that any signal that can be measured can be hacked, so even capturing these kinds of physiological data per se opens the door for spying and other profound invasions of privacy.

Which takes us inevitably back into the shadow of the polygraph.

I’m sure attitudes will change if the right piece of technology comes along that demonstrates the upside of physiological computing. But if early systems don’t take data privacy seriously, as in very seriously, the public could go cold on this concept before the systems have had a chance to prove themselves in the marketplace.

For musings on a similar theme, see my previous post Designing for the Gullible.

Heart Chamber Orchestra

I came across this article about the Heart Chamber Orchestra on the Wired site last week. The Orchestra are a group of musicians who wear ECG monitors whilst they play – the signals from the ECG feed directly into laptops and adapt the musical scores played directly and in real-time. They also have some nice graphics generated by the ECG running in the background when they play (see clip below). What I think is really interesting about this project is the reflexive loop set up between the ECG, the musician’s response and the adaptation of the musical score. It really goes beyond standard biofeedback – a live feed from the ECG mutates the musical score, the player responds to technical/emotional qualities of that score, which has a second-order effect on the ECG and so on. In the Wired article, they refer to the possibility of the audience being equipped with ECG monitors to provide another input to the loop – which is truly a mind-boggling possibility in terms of a fully-functioning biocybernetic loop.

The thing I find slightly frustrating about the article and the information contained in the project website is the lack of information about how the ECG influences the musical score. In a straightforward way, an ECG will yield a beat-to-beat interval, which of course could generate a metronomic beat if averaged over the group. Alternatively each individual ECG could generate its own beat, which could be superimposed over one another. But there are dozens of ways in which ECG information could be used to adapt a musical score in real time. According to the project information, there is also a composer involved doing some live manipulations of the score, but it’s hard to figure out how much of the real-time transformation is coming from him or her and how much is directly from the ECG signal.
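To illustrate the two straightforward options, here is a minimal sketch that turns R-peak timestamps into beat-to-beat intervals and then into a tempo, either per musician or averaged over the group. This is a guess at one simple mapping, not a description of what the Orchestra actually do; the function names and the example timestamps are hypothetical, and R-peak detection itself is assumed to have happened upstream.

```python
from statistics import mean
from typing import Sequence


def beat_intervals(r_peak_times: Sequence[float]) -> list:
    """Beat-to-beat intervals (seconds) from successive R-peak times."""
    return [b - a for a, b in zip(r_peak_times, r_peak_times[1:])]


def tempo_bpm(r_peak_times: Sequence[float]) -> float:
    """One musician's tempo: 60 divided by their mean interval."""
    return 60.0 / mean(beat_intervals(r_peak_times))


def group_tempo_bpm(players: Sequence[Sequence[float]]) -> float:
    """A single metronomic beat: average each musician's mean interval
    across the group, then convert to beats per minute."""
    group_interval = mean(mean(beat_intervals(p)) for p in players)
    return 60.0 / group_interval


if __name__ == "__main__":
    # Hypothetical R-peak times in seconds for two musicians.
    musician_a = [0.0, 1.0, 2.0]
    musician_b = [0.0, 0.5, 1.0]
    print(tempo_bpm(musician_a), tempo_bpm(musician_b))
    print(group_tempo_bpm([musician_a, musician_b]))
```

Superimposing individual beats would simply mean keeping each `tempo_bpm` value separate rather than collapsing them into one group figure.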

I should also say that the Orchestra are currently competing for the FILE PRIX LUX prize and you can vote for them here.

Before you do, you might want to see the orchestra in action in the clip below.

This is your brain giving up

Like a lot of people, I came to the area of physiological computing via affective computing. The early work I read placed enormous emphasis on how systems may distinguish different categories of emotion, e.g. frustration vs. happiness. This is important for some applications, but most of all I was interested in user states that related to task performance, specifically those states that might precede and predict a breakdown of performance. The latter can take several forms: the quality of performance can collapse because the task is too complex to figure out, or because you’re too tired or too drunk, etc. What really interested me was how performance collapsed when people simply gave up or ‘exhibited insufficient motivation’ as the psychological textbooks would say.

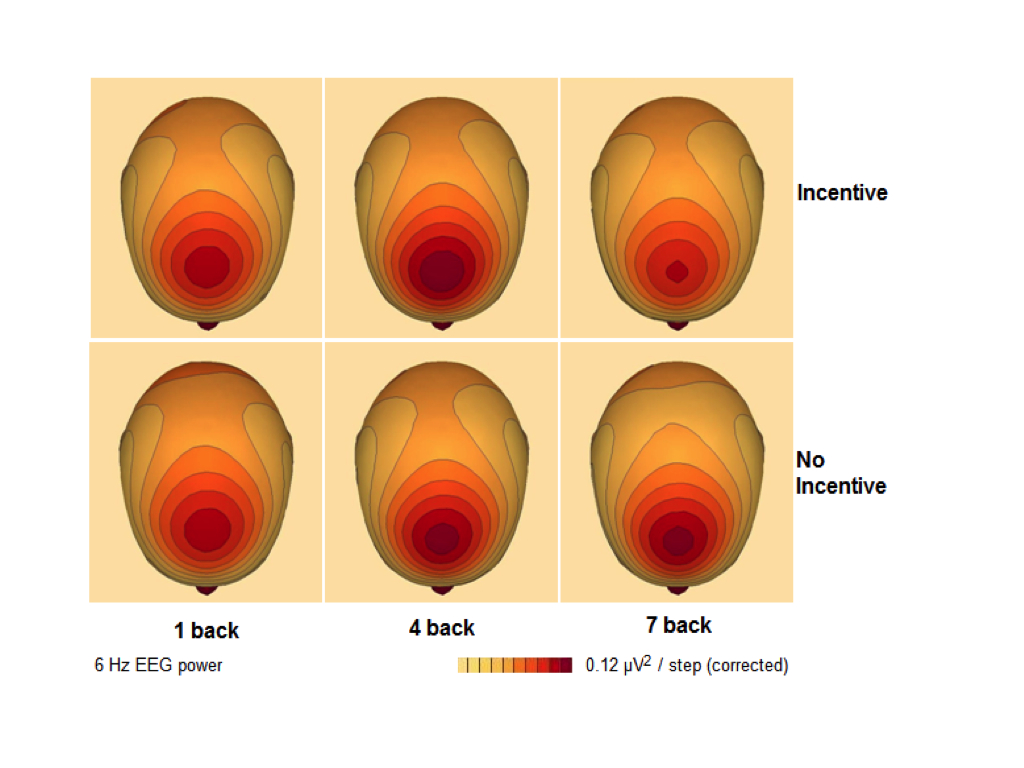

People can give up for all kinds of reasons – they may be insufficiently challenged (i.e. bored), they may be frustrated because the task is too hard, they may simply have something better to do. The prediction of motivation or task engagement seems very important to me for biocybernetic adaptation applications, such as games and educational software. Several psychology research groups have looked at this issue by studying psychophysiological changes accompanying changes in motivation and responses to increased task demand. A group led by Alan Gevins performed a number of studies where they incrementally ramped up task demand; they found that theta activity in the EEG increased in line with task demands. They noted this increase was specific to the frontal-central area of the brain.

We partially replicated one of Gevins’ studies last year and found support for changes in frontal theta. We tried to make the task very difficult so people would give up but were not completely successful (when you pay people to come to your lab, they tend to try really hard). So we did a second study, this time making the ‘impossible’ version of the task really impossible. The idea was to expose people to low, high and extremely high levels of memory load. In order to make the task impossible, we also demanded participants hit a minimum level of performance, which was modest for the low demand condition and insanely high for the extremely high demand task. We also had our participants do each task on two occasions; once with the chance to win cash incentives and once without.

The results for the frontal theta are shown in the graphic below. You can clearly see the frontal-central location of the activity (nb: the more red the area, the more theta activity was present). What’s particularly interesting and especially clear in the incentive condition (top row of graphic) is that our participants reduced theta activity when they thought they didn’t have a chance. As one might suspect, task engagement includes a strong component of volition and brain activity should reflect the decision to give up and disengage from the task. We’ll be following up this work to investigate how we might use the ebb and flow of frontal theta to capture and integrate task engagement into a real-time system.
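As a rough idea of what capturing frontal theta involves computationally, here is a minimal sketch that estimates the share of signal power falling in the theta band for one window of EEG. The 4–8 Hz band is the conventional definition of theta and the 1–30 Hz reference range, the sampling rate and the synthetic test signal are all assumptions for illustration; none of this describes the analysis pipeline used in our studies. It uses a plain discrete Fourier transform from the standard library to stay self-contained, where a real system would use an FFT library.

```python
import cmath
import math
from typing import Sequence


def band_power(samples: Sequence[float], fs: float,
               low: float, high: float) -> float:
    """Summed spectral power for frequencies in [low, high) Hz."""
    n = len(samples)
    avg = sum(samples) / n
    centred = [x - avg for x in samples]          # remove DC offset
    total = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs / n                         # frequency of bin k
        if low <= freq < high:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(centred))
            total += abs(coeff) ** 2
    return total


def relative_theta(samples: Sequence[float], fs: float) -> float:
    """Theta (4-8 Hz) power as a fraction of 1-30 Hz power."""
    return band_power(samples, fs, 4.0, 8.0) / band_power(samples, fs, 1.0, 30.0)


if __name__ == "__main__":
    fs = 64.0                                      # hypothetical sampling rate
    t = [i / fs for i in range(128)]               # a 2-second window
    # Synthetic signal: 6 Hz (theta) plus a weaker 10 Hz component.
    eeg = [2.0 * math.sin(2 * math.pi * 6 * s) + math.sin(2 * math.pi * 10 * s)
           for s in t]
    print(round(relative_theta(eeg, fs), 3))
```

Computing this over successive windows from a frontal-central electrode would give a time series whose rise and fall could, in principle, feed a real-time index of task engagement.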

The Extended Nervous System

I’d like to begin the new year on a philosophical note. A lot of research in physiological computing is concerned with the practicalities of developing this technology. But what about the conceptual implications of using these systems (assuming that they are constructed and reach the marketplace)? At a fundamental level, physiological computing represents an extension of the human nervous system. This is nothing new. Our history is littered with tools and artifacts, from the plough to the internet, designed to extend the ‘reach’ of human senses and capabilities. As our technology becomes more compact, we become increasingly reliant on tools to augment our cognitive capacity. This can be as trivial as using the address book on a mobile phone as a shortcut to “remembering” a friend’s number or having an electronic reminder of an imminent appointment. This kind of “scaffolded thinking” (Clark, 2004) represents a merger between a human limitation (long-term memory) and a technological solution; we’ve effectively subcontracted part of our internal cognitive store to an external silicon one. Andy Clark argues persuasively in his book that these human-machine mergers are a perfectly natural consequence of human-technology co-evolution.

If we use technology to extend the human nervous system, does this also represent a natural consequence of the evolutionary trajectory that we share with machines? It is one thing to delegate information storage to a machine but granting access to the central nervous system, including the inner sanctum of the brain, represents a much more intimate category of human-machine merger.

In the case of muscle interfaces, where EMG activity or eye movements function as proxies of a mouse or touchpad input, I feel the nervous system has been extended in a modest way – gestures are simply recorded at a different place; rather than looking and pointing, you can now just look. BCIs represent a more interesting case. Many are designed to completely circumvent the conventional motor component of input control. This makes BCIs brilliant candidates for assistive technology and effective usage of a BCI device feels slightly magical – because it is the ultimate in remote control. But like muscle interfaces, all we have done is create an alternative route for human-computer input. The exciting subtext to BCI use is how the user learns to self-regulate brain activity in order to successfully operate this category of technology. The volitional control of brain activity seems like an extension of the human nervous system in my view (or to be more specific, an extension of how we control the human nervous system), albeit one that occurs as a side effect or consequence of technology use.

Technologies based on biofeedback mechanics, such as biocybernetic adaptation and ambulatory monitoring, literally extend the human nervous system by transforming a feeling/thought/experience that is private, vague and subjective into an observable representation that is public, quantified and objective. In addition, biocybernetic systems that monitor changes in physiology to trigger adaptive system responses take the concept further – these systems don’t merely represent the activity of the nervous system, they are capable of acting on the basis of this activity, completely bypassing human awareness if necessary. That prospect may alarm many but one shouldn’t be too disturbed – the autonomic nervous system routinely does hundreds of things every minute just to keep us conscious and alert – without ever asking or intruding on consciousness. Of course the process of autonomic control can run amiss, take panic attacks as one example, and it is telling that biofeedback represents one way to correct this instance of autonomic malfunction. The therapy works by making a hidden activity quantifiable and open to inspection, and in doing so, provides the means for the individual to “retrain” their own autonomic system via conscious control. This dynamic runs through those systems concerned with biocybernetic control and ambulatory monitoring. Changes at the user interface provide feedback on emotion or cognition and invite the user to extend self-awareness, and in doing so, to enhance control over their own central nervous systems. As N. Katherine Hayles puts it in her book on posthumanism: “When the body is integrated into a cybernetic circuit, modification of the circuit will necessarily modify consciousness as well. Connected to multiple feedback loops to the objects it designs, the mind is also an object of design.”

So, really what we’re talking about is extending our human nervous systems via technology and in doing so, enhancing our ability to self-regulate our human nervous systems. To slightly adapt a phrase from the autopoietic analysis of the nervous system, we are observing systems observing ourselves observing (ourselves).

It has been argued by Rosalind Picard among others that increased self-awareness and self-control of bodily states is a positive aspect of this kind of technology. In some cases, such as anger management and stress reduction, I can see clear arguments to support this position. On the other hand, I can also see potential for confusion and distress due to disembodiment (I don’t feel angry but the computer says I do – so which is me?) and invasion of privacy (I know you say you’re not angry but the computer says you are).

If we are to extend the nervous system, I believe we must also extend our conception of the self – beyond the boundaries of the skull and the skin – in order to incorporate feedback from a computer system into our strategies for self-regulation. But we should not be sucked into simplistic conflicts by these devices. As N. Katherine Hayles points out, border crossings between humans and machines are achieved by analogy, not simple re-representation – the quantified self out there and the subjective self in here occupy different but overlapping spheres of experience. We must bear this in mind if we, as users of this technology, are to reconcile the plentitude of embodiment with the relative sparseness of biofeedback.