A video about the exhibit Steve and I have been collaborating on with Manifest.AR is now online. The exhibit is being shown at FACT Liverpool until 15th September.

Author Archives: Kiel Gilleade

Manifest.AR Show and Tell

For the past two weeks Steve and I have been working with Manifest.AR, a collective of AR artists, on an exhibit to be shown at FACT in February 2013. The exhibit has been commissioned as part of the ARtSENSE project, which we work on.

At the end of the two weeks, FACT hosted a public show and tell event presenting the current works in progress.

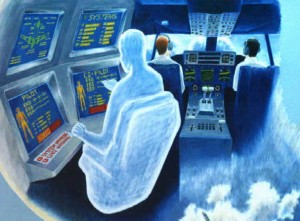

The biocybernetic loop: A conversation with Dr Alan Pope

The biocybernetic loop is the underlying mechanic behind physiological interactive systems. It describes how physiological information is collected from a user, analysed and subsequently translated into a response at the system interface. The most common manifestation of the biocybernetic loop is found in traditional biofeedback therapies, where the physiological signal is presented as a reflective numeric or graphical display (i.e. the representation changes in real time with the signal).
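
To make the three stages (collect, analyse, respond) concrete, here is a minimal Python sketch of a reflective biofeedback loop. It assumes a stream of heart rate samples in beats per minute; the function names, the three-sample moving average and the 50 to 150 bpm display range are illustrative choices, not taken from any particular system.

```python
from collections import deque
from statistics import mean


def smooth(window: deque, sample: float) -> float:
    """Analyse: add the new sample to the window and return its moving average."""
    window.append(sample)
    return mean(window)


def render_bar(value: float, lo: float, hi: float, width: int = 20) -> str:
    """Respond: draw a text bar whose length mirrors the value within [lo, hi]."""
    clamped = min(max(value, lo), hi)
    filled = round((clamped - lo) / (hi - lo) * width)
    return "#" * filled + "-" * (width - filled)


def reflective_loop(samples, lo=50.0, hi=150.0, window_size=3):
    """Run collect -> analyse -> respond once per incoming sample."""
    window = deque(maxlen=window_size)  # only the most recent samples are kept
    frames = []
    for sample in samples:                                       # collect
        value = smooth(window, sample)                           # analyse
        frames.append(f"{value:6.1f} {render_bar(value, lo, hi)}")  # respond
    return frames


for frame in reflective_loop([72, 75, 80, 90, 88]):
    print(frame)
```

The display only mirrors the signal; nothing about the system itself changes, which is what distinguishes reflective biofeedback from the adaptive form described next.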

In the 1990s a team at NASA published a paper that introduced a new take on the traditional biocybernetic loop: biocybernetic adaptation, where physiological information is used to adapt the system the user is interacting with rather than merely to reflect the signal back. In this case the team implemented a piloting simulator that used EEG measures to control the autopilot status, with the aim of regulating pilot attentiveness.
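
The key difference from reflective biofeedback is that the measured state changes the system rather than a display. Below is a hedged sketch of such an adaptive loop. It assumes an EEG engagement index of the form beta / (alpha + theta), the index commonly associated with this line of NASA research, and uses hypothetical low/high thresholds; it illustrates negative-feedback adaptation in general and is not a reconstruction of the original simulator.

```python
from dataclasses import dataclass


def engagement_index(alpha: float, beta: float, theta: float) -> float:
    """Assumed EEG engagement index: beta band power / (alpha + theta band power)."""
    return beta / (alpha + theta)


@dataclass
class AutopilotController:
    """Negative-feedback rule with hypothetical thresholds: falling engagement
    hands the task back to the pilot, high engagement restores the autopilot."""
    low: float
    high: float
    autopilot_on: bool = True

    def update(self, alpha: float, beta: float, theta: float) -> bool:
        e = engagement_index(alpha, beta, theta)
        if self.autopilot_on and e < self.low:
            self.autopilot_on = False  # pilot disengaging: switch to manual control
        elif not self.autopilot_on and e > self.high:
            self.autopilot_on = True   # pilot re-engaged: automation may resume
        return self.autopilot_on
```

Using two thresholds rather than one adds hysteresis, so small fluctuations around a single cut-off do not make the autopilot flicker on and off.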

Dr. Alan Pope was the lead author on this paper and has worked extensively in the field of biocybernetic systems for several decades; outside the academic community he’s probably best known for his work on biofeedback gaming therapies. To our good fortune we met Alan at a workshop we ran last year at CHI (a video of his talk can be found here), and he kindly agreed to let us delve further into his work with an interview.

So follow us across the threshold, if you will, and learn more about the origins of the biocybernetic loop, its use at NASA and its future in research and industry.

CFP – Brain Computer Interfaces Grand Challenge 2012

The field of Physiological Computing consists of systems that use data from the human nervous system as input to a technological system. Traditionally these systems have been grouped into two categories: those where physiological data is used as a form of input control, and those where spontaneous changes in physiology are used to monitor the psychological state of the user. The field of Brain-Computer Interfacing (BCI) traditionally conceives of BCIs as controllers for interfaces, devices which allow you to “act on” external devices as a form of input control. However, most BCIs do not provide a reliable and efficient means of input control and are difficult to learn and use relative to other available modes.

We propose to change the conceptual use of “BCI as an actor” (input control) into “BCI as an intelligent sensor” (monitor). This shift of emphasis promotes the capacity of BCI to represent spontaneous changes in the state of the user in order to induce intelligent adaptation at the interface. BCIs can increasingly be used as intelligent sensors which “read” passive signals from the nervous system and infer user states to adapt human-computer, human-robot or human-human interaction (HCI, HRI, HHI).

This perspective on BCIs challenges researchers to understand how information about the user state should support different types of interaction dynamics, from supporting the goals and needs of the user to conveying state information to other users. What adaptation to which user state constitutes opportune support? How does feedback from the changing HCI and HRI affect brain signals? Many research challenges need to be tackled here.

Quantified Self Europe 2011 Videos

The Quantified Self Europe presentation videos are now online. Enjoy!

Reflections on Quantified Self Europe 2011

Last month I attended the inaugural Quantified Self Europe conference in Amsterdam. I was there to present a follow-up to the talk I gave in 2010 at Quantified Self London, in which I described my experiences of tracking my heart rate and publishing it in real time over the Internet.

The Body Blogger system, as it became known after a term Steve came up with back in 2009, was only really intended to demonstrate what could be done with the BM-CS5 heart monitors we’d recently purchased. As these devices allowed multiple heart rate monitors to stream data wirelessly and in real time to a single PC, there were a number of interaction projects we wanted to try out, and using web services to manage the incoming data and provide a platform for app development seemed the best way to realise our ideas (see here and here for other things we’ve used The Body Blogger engine for).
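
A minimal sketch of the kind of service described above, assuming readings arrive as (monitor id, bpm, timestamp) values on the receiving PC. The `HeartRateHub` class, its JSON shape and the history limit are hypothetical; this is not the Body Blogger engine itself and it does not use any BM-CS5 API.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class HeartRateHub:
    """Hypothetical aggregator: one PC receives readings from several
    wireless heart rate monitors and exposes the latest value of each."""
    history_limit: int = 100
    readings: Dict[str, List[Tuple[float, int]]] = field(default_factory=dict)

    def ingest(self, monitor_id: str, bpm: int,
               timestamp: Optional[float] = None) -> None:
        """Store one reading, keeping at most `history_limit` per monitor."""
        ts = time.time() if timestamp is None else timestamp
        series = self.readings.setdefault(monitor_id, [])
        series.append((ts, bpm))
        if len(series) > self.history_limit:
            del series[: len(series) - self.history_limit]  # drop the oldest readings

    def latest_json(self) -> str:
        """Serialise the most recent reading per monitor, e.g. for a web endpoint."""
        latest = {mid: {"timestamp": s[-1][0], "bpm": s[-1][1]}
                  for mid, s in self.readings.items() if s}
        return json.dumps(latest, sort_keys=True)
```

A real deployment would put an HTTP endpoint in front of `latest_json()` so that apps can poll or subscribe to it; which web framework to use is left open here.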

Having tracked and shared my heart rate for over a year now, I’ve pretty much exhausted what I can do with the current implementation of the system, which I didn’t spend a whole lot of time developing in the first place (about a day on the core), and so my Amsterdam talk was pretty much a swan song to my experiences. During the summer I stopped tracking my heart rate (and then fell sick for the first time since I started wearing the device; go figure, I’d have liked to have captured that) so I could work on the next version of the system, and lent the current one to Ute, who was interested in combining physiological monitoring with a mood tracking service. Ute was also in Amsterdam to present on her experiences with body blogging and mood tracking (for more information see the proposal and first impressions posts).

As with my previous reflections post, I’ve posted this rather late, so if you’re interested in what happened at the conference check out the following write-ups: the Guardian, Tom Hume and Alexandra Carmichael. I imagine the videos of the day will be online in the coming month or so, so if you’re interested in the particulars of my talk you’ll need to wait just a little bit longer, though I do cover a few of the same topics in my CHI 2011 talk (video) on the issues associated with inference and sharing physiological data.

CHI 2011 Workshop – NASA does Biofeedback Gaming on the Wii

In our final workshop video Alan Pope presents “Movemental”: Integrating Movement and the Mental Game (PDF). For the uninitiated, Alan Pope co-authored a paper back in the early 1990s introducing the concept of biocybernetic adaptation; it has become a key work for us in the field of Physiological Computing. We were very excited to receive a paper submission from Alan, and it was great to have him talk shop at the event.

Alan’s latest work with his colleague Chad Stephens describes several new methods of adapting controller interfaces using physiology, in this case a Wii game controller. I was going to release the original footage I recorded during the workshop; however, the camera failed to pick up any of the game demos that were shown. As biofeedback-based game mechanics are one of my particular research fancies (e.g. lie detection, sword fighting), I’ve remade the video with Alan’s permission using his PowerPoint presentation, so the demos can be enjoyed in all their glory.

Pope, A. and Stephens, C. “Movemental”: Integrating Movement and the Mental Game (PDF)

A videogame or simulation may be physiologically modulated to enhance engagement by challenging the user to achieve a target physiological state. A method and several implementations for accomplishing this are described.
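
One simple reading of the abstract's idea, sketched in Python: scale the effect of a raw controller input by how close the player's measured physiology is to a target state, so the game rewards reaching that state. The linear falloff, the `tolerance` parameter and the function names are assumptions for illustration; the paper describes its own method and implementations, which this sketch does not reproduce.

```python
def modulation_factor(measured: float, target: float, tolerance: float) -> float:
    """Return 1.0 at the target state, falling linearly to 0.0 once the
    measured signal is `tolerance` or more away from it (tolerance > 0)."""
    distance = abs(measured - target)
    return max(0.0, 1.0 - distance / tolerance)


def modulated_input(raw_input: float, measured: float, target: float,
                    tolerance: float) -> float:
    """Scale a raw controller input (e.g. a motion reading) by how close
    the player currently is to the target physiological state."""
    return raw_input * modulation_factor(measured, target, tolerance)


# Hypothetical example: target heart rate 70 bpm, tolerance 10 bpm.
print(modulated_input(2.0, measured=75.0, target=70.0, tolerance=10.0))
```

With this choice a calm, on-target player gets the full effect of their movements, while drifting away from the target state progressively dampens the controller.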

So that’s the end of our workshop video series. I hope you’ve all enjoyed them; for now, I’m going to hibernate for a month to recover from the editing process.

CHI 2011 Workshop – Session 4 “Sharing the Physiological Experience” Videos Online

This week sees the release of the talks presented during the Sharing the Physiological Experience session. To view these talks and more, please click here. For guidance on the session 4 talks, please consult the abstracts listed below.

This release marks the end of the CHI 2011 Brain and Body Designing for Meaningful Interaction workshop videos. I’d like to thank our presenters for allowing us to share their talks on the Internet and for choosing our workshop to present their research. Without you the workshop could not have been the success it was. Hopefully these videos will go some small way towards bringing your excellent research to a wider audience, and if not, they can always be used to explain to family and friends what exactly you do.

CHI 2011 Workshop – Session 3 “Evaluating the User Experience” Videos Online

This week sees the release of the talks presented during the Evaluating the User Experience session. To view these talks and more, please click here. For guidance on the session 3 talks, please consult the abstracts listed below.